Inspiration

According to the World Health Organization (WHO), at least 2.2 billion people worldwide have a near or distance vision impairment. This population spans all age groups and socio-economic backgrounds, creating strong demand for assistive technologies, medical interventions, and inclusive services. At the same time, rapid advances in technologies such as AI are expanding what is possible for accessibility. By addressing the needs of visually impaired people, businesses can not only tap into a vast market but also foster social inclusion and improve quality of life worldwide.

ThirdEye is inspired by the need for technology that helps visually impaired people navigate their surroundings safely and conveniently. With the multimodal capabilities of the latest Gemini API, AI can now understand its surroundings better, both visually and verbally. Given this strong demand and these technical advances, our team decided to build an app that assists visually impaired people.

What it does

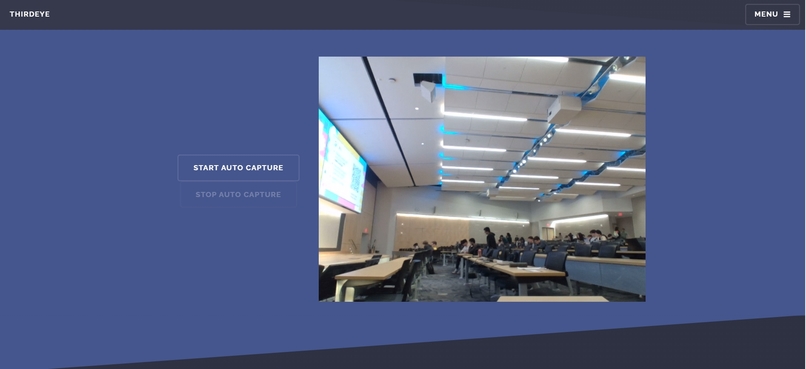

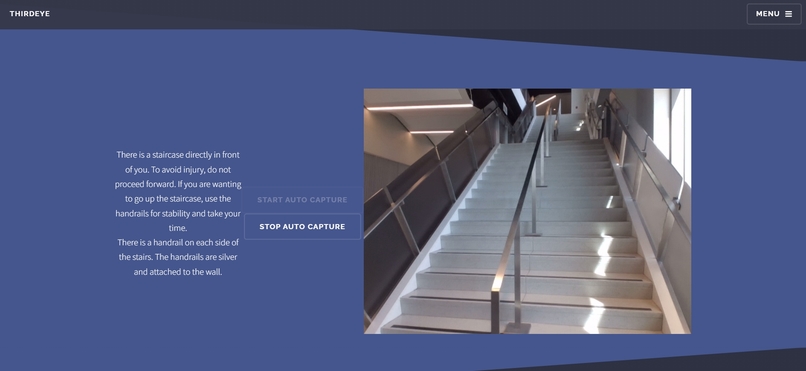

ThirdEye is a multi-functional app designed to assist visually impaired people, as well as anyone who needs help with object recognition and organization. It uses image processing and text-to-speech technologies to provide real-time assistance in a variety of scenarios.

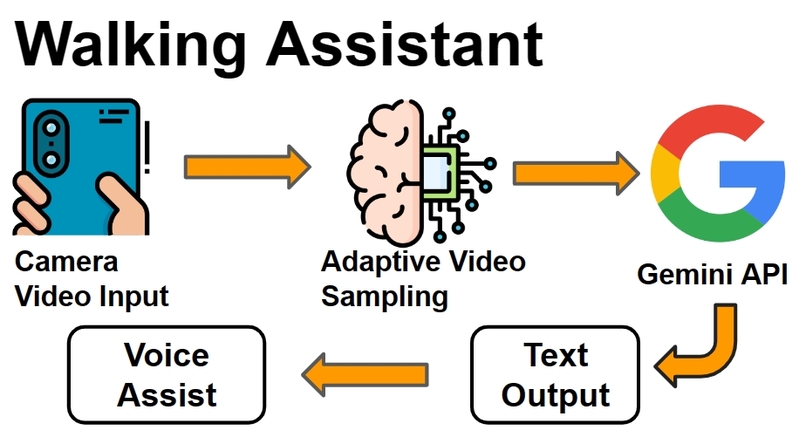

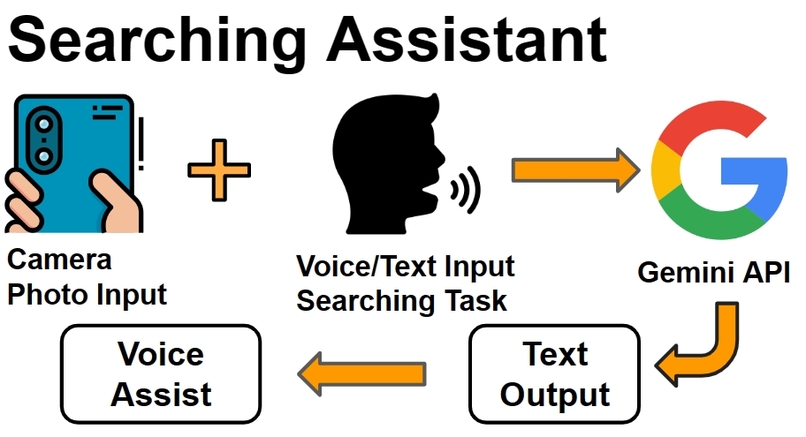

It has two main modes: Walking Assistant and Searching Assistant.

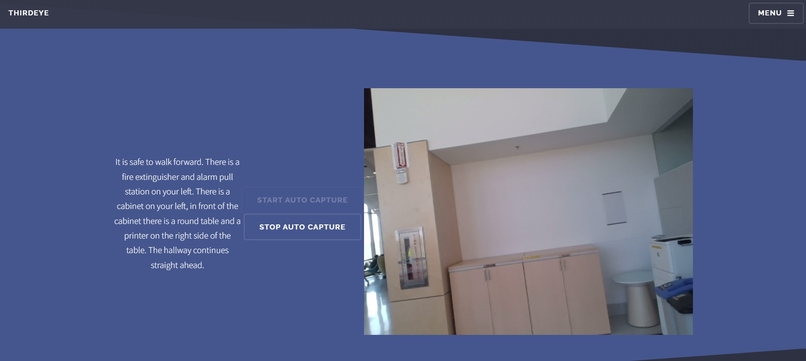

In Walking Assistant mode, ThirdEye monitors the surrounding area through the camera and recognizes potential dangers to the user. It gives real-time verbal instructions that help the user avoid hazards in the surrounding environment.
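The loop below is a minimal sketch of how a Walking Assistant cycle could work, not ThirdEye's actual code. It assumes two hypothetical callables: `ask_model(prompt, frame)`, standing in for a multimodal Gemini request that returns text, and `speak(text)`, standing in for the text-to-speech engine. The prompt wording, the one-hazard-per-line reply format, and the `cooldown_s` de-duplication are all illustrative assumptions; the cooldown keeps the same warning from being repeated on every frame.

```python
import time
from typing import Callable, Dict, Iterable, List

# Illustrative prompt (assumption): ask for one hazard per line, or NONE.
HAZARD_PROMPT = (
    "You are assisting a visually impaired pedestrian. List any obstacles or "
    "hazards in this image, one per line, with a short direction such as "
    "'left', 'ahead' or 'right'. Reply NONE if the path is clear."
)


def parse_hazards(reply: str) -> List[str]:
    """Turn a line-per-hazard reply into a clean list, dropping bullets and NONE."""
    lines = [line.strip(" -*\t") for line in reply.splitlines()]
    return [line for line in lines if line and line.upper() != "NONE"]


def walking_assistant(
    frames: Iterable[bytes],
    ask_model: Callable[[str, bytes], str],  # hypothetical wrapper around a Gemini call
    speak: Callable[[str], None],            # hypothetical wrapper around text-to-speech
    cooldown_s: float = 5.0,
    clock: Callable[[], float] = time.monotonic,
) -> None:
    """Announce hazards frame by frame, muting a repeated warning within cooldown_s."""
    last_spoken: Dict[str, float] = {}
    for frame in frames:
        now = clock()
        for hazard in parse_hazards(ask_model(HAZARD_PROMPT, frame)):
            key = hazard.lower()
            seen = last_spoken.get(key)
            if seen is None or now - seen >= cooldown_s:
                speak(hazard)
                last_spoken[key] = now
```

Injecting `ask_model`, `speak`, and `clock` as parameters keeps the control logic independent of any particular SDK or speech engine, so it can be exercised with fakes.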

In Searching Assistant mode, the app takes a picture and a spoken request from the user and helps them find what they need. For example, people with red-green color blindness can use the app to find items of a specific color. The app can also help users locate small objects in a large, cluttered room efficiently.
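A Searching Assistant request combines the user's (already transcribed) spoken request with a photo. The sketch below shows one way to do that under stated assumptions: `ask_model(prompt, image)` and `speak(text)` are hypothetical stand-ins for a Gemini call and text-to-speech, and the JSON reply format (`found`, `location`) is our own convention requested in the prompt, not a built-in Gemini response format.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Illustrative prompt (assumption); doubled braces survive str.format as literal braces.
SEARCH_PROMPT = (
    "The user cannot easily see this scene and is looking for: {request}\n"
    "Look at the photo and answer ONLY with JSON of the form "
    '{{"found": true or false, "location": "<where it is, relative to the camera>"}}.'
)


@dataclass
class SearchResult:
    found: bool
    location: str


def parse_search_reply(reply: str) -> SearchResult:
    """Parse the model's JSON reply; treat anything malformed as 'not found'."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return SearchResult(found=False, location="")
    if not isinstance(data, dict):
        return SearchResult(found=False, location="")
    return SearchResult(
        found=data.get("found") is True,
        location=str(data.get("location") or ""),
    )


def search_assistant(
    image: bytes,
    request: str,
    ask_model: Callable[[str, bytes], str],  # hypothetical wrapper around a Gemini call
    speak: Callable[[str], None],            # hypothetical wrapper around text-to-speech
) -> SearchResult:
    """Ask the model where the requested item is and read the answer aloud."""
    result = parse_search_reply(ask_model(SEARCH_PROMPT.format(request=request), image))
    if result.found:
        speak(f"Found it: {result.location}")
    else:
        speak("Sorry, I could not find that in this picture.")
    return result
```

For a color-blind user, the request might be "the red folder, not the green one"; the model does the color discrimination and the app only relays where the item is.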

How we built it

We built ThirdEye with a combination of frontend and backend technologies. The frontend was developed with JavaScript, HTML, and React, and the backend uses Python, Flask, and the Gemini API. We also implemented adaptive video sampling based on conversation complexity to provide a better user experience. Gemini's multimodal capability is the backbone of the project: it is used mainly to understand the user's spoken and visual input.
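The write-up does not say how "conversation complexity" is measured or how it maps to a sampling rate, so the following is only a hypothetical illustration of adaptive video sampling. It assumes a crude complexity score built from the length of the latest request and the number of conversation turns (weights and saturation points are arbitrary), assumes that more complex conversations warrant more frames per second, and then keeps at most one camera frame per sampling interval.

```python
from typing import List, Optional, Sequence


def complexity_score(utterance: str, turns: int) -> float:
    """Hypothetical 0..1 score: longer requests and longer conversations count as more complex."""
    word_part = min(len(utterance.split()) / 20.0, 1.0)  # saturates at 20 words (arbitrary)
    turn_part = min(turns / 10.0, 1.0)                   # saturates at 10 turns (arbitrary)
    return 0.7 * word_part + 0.3 * turn_part


def sampling_fps(score: float, min_fps: float = 0.5, max_fps: float = 4.0) -> float:
    """Linearly interpolate the frame rate between min_fps and max_fps."""
    score = max(0.0, min(1.0, score))
    return min_fps + score * (max_fps - min_fps)


def sample_frames(timestamps: Sequence[float], fps: float) -> List[int]:
    """Return indices of frames to send to the model, at most one per 1/fps seconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    interval = 1.0 / fps
    chosen: List[int] = []
    next_t: Optional[float] = None
    for i, t in enumerate(timestamps):
        if next_t is None or t >= next_t:
            chosen.append(i)
            next_t = t + interval
    return chosen
```

Sending fewer frames during simple exchanges reduces latency and API usage, which matters for real-time feedback; the direction and shape of the mapping would need tuning against real usage.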

Challenges we ran into

Challenges we encountered during development included integrating the Gemini API for image processing and making the app's features work together smoothly. We also had to optimize the app's performance so that images are processed in real time and text is interpreted accurately.

Accomplishments that we're proud of

We are proud to have developed a comprehensive solution that addresses the needs of visually impaired people while also helping anyone who needs assistance with object recognition and organization. Its user-friendly interface and seamless integration of technologies make it a valuable tool for improving accessibility and independence.

What we learned

Through developing ThirdEye, we learned how to use the Gemini API effectively and how to combine image processing with text-to-speech technologies to build accessible, user-friendly applications. We also gained valuable insight into the challenges visually impaired people face in their daily lives.

What's next for ThirdEye

We plan to make ThirdEye more powerful by further fine-tuning the model to improve its performance. We will also add more accessibility features to make the app easier for our users to use.
