Inspiration

There is currently a large communication gap between people who communicate in ASL and the rest of the population. Interactions across that gap often leave both parties struggling to get their point across. Our application is a proof of concept for a real-time ASL translator that bridges this gap.

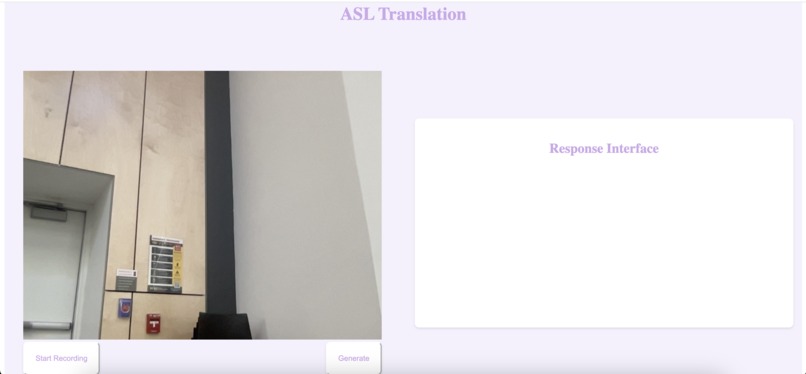

What it does

Our application lets users record a video of themselves signing a word or phrase in ASL. We then split the video into frames and send them to Gemini, which translates the frames into text.
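As a rough illustration of the "frames to text" step, the sketch below packs a list of frames and a prompt into a JSON body shaped like the Gemini REST `generateContent` request (a `contents` list holding `parts`, with images as base64 `inline_data`). The function name `build_gemini_request`, the prompt wording, and the assumption that frames are already JPEG-encoded bytes are ours for illustration; this is not necessarily how the project's code is written.

```python
import base64
import json


def build_gemini_request(frames: list[bytes], prompt: str) -> dict:
    """Build a request body with one text part followed by one image part per frame.

    Assumption: each item in `frames` is a JPEG-encoded image as raw bytes.
    """
    parts = [{"text": prompt}]
    for jpeg_bytes in frames:
        parts.append(
            {
                "inline_data": {
                    "mime_type": "image/jpeg",
                    # Inline image data is sent as base64 text inside the JSON body.
                    "data": base64.b64encode(jpeg_bytes).decode("ascii"),
                }
            }
        )
    return {"contents": [{"parts": parts}]}


if __name__ == "__main__":
    # Hypothetical prompt and placeholder bytes, just to show the shape of the body.
    body = build_gemini_request(
        [b"frame-one", b"frame-two"],
        "These frames show a person signing in ASL. Reply with the English translation only.",
    )
    print(json.dumps(body, indent=2)[:200])
```

Keeping the frames in order after the prompt matters here, since the model only sees the sequence of parts and has no other notion of time.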

How we built it

We built a React frontend and a Flask backend that interacts with the Gemini API.

Challenges we ran into

Prompting Gemini and determining how many frames per second to send to the model were the biggest challenges.
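To make the frames-per-second trade-off concrete, here is a minimal sketch of one way to pick which frames to keep when downsampling a clip to a target rate. The helper `sample_frame_indices` and its parameters are hypothetical, not taken from the project; it only computes indices and leaves decoding the video to whatever library is in use.

```python
def sample_frame_indices(total_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return evenly spaced frame indices that approximate `target_fps`.

    If the target rate is at or above the source rate, every frame is kept.
    """
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if total_frames <= 0:
        return []

    # Number of source frames to advance per kept frame (never less than one).
    step = max(source_fps / target_fps, 1.0)

    indices = []
    count = 0
    while True:
        index = int(count * step)  # multiply rather than accumulate to avoid float drift
        if index >= total_frames:
            break
        indices.append(index)
        count += 1
    return indices


if __name__ == "__main__":
    # A 3-second clip recorded at 30 fps, sampled at 2 fps.
    print(sample_frame_indices(90, 30, 2))
```

A lower target rate sends fewer images per request but risks skipping the frames where a sign changes, which is the tension described above.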

Accomplishments that we're proud of

The model can detect very simple phrases.

What we learned

This task is difficult without the ability to fine-tune the model on images.

What's next for Gemini ASL Translator

Fine-tuning on images and translating into multiple languages.

Built With

- ai

- flask

- gemini

- javascript

- python

- react
